This is a story spanning two decades of setbacks and growth as a designer and instructor.

This is a story of two artificial intelligences (AI) trained on the collective media output of human history.

/imagine a street –ar 2:1 –video

In the beginning there is only noise and desire. We see blurry shapes, or hear vague sounds, but do not understand their meaning. We are hungry, tired, or bored. Over days, then months, then years, we begin to recognize familiar shapes and sounds. Our brains are forming the neural pathways that tell us certain patterns in time and space are significant and produce results while others do not. We are able to discern the signal from the noise.

Over the last few years, there has been tremendous innovation in using machine learning to understand natural language and to translate between text and images. One of the ways this has been accomplished is through latent diffusion[1]: a process by which an AI model is trained to destroy a set of images by applying noise and then learning how to reconstruct new images from that noise. By combining the image data with text captions from those original images, the model begins to perceive patterns in the noise, and to iteratively refine the noise towards a novel image guided by a text prompt.

/imagine a street

!dream a street

We start with some streets. Not the right streets, but they will do for now. These streets don’t exist and are complete fabrications. But that’s okay, too. Architects are used to working with fictional images.

On the left is the output of Midjourney[2], and on the right is the output of Stable Diffusion[3]. Both are two of the many AI text-to-image generators, launched in the last year, that use diffusion. Both interfaces currently require a keyword to initiate the image generation. For Midjourney the keyword is "/imagine" and for Stable Diffusion it is "!dream." After the keyword is a “prompt” used to guide the direction of the denoising process.

/imagine a street

!dream a street -n 4 -g -S 2240456558

Still not the streets we are looking for. But our prompt isn’t very specific. We could keep on refreshing and get an infinite number of nonexistent streetscapes. Let that sink in: we now have tools that may create more artificially generated images this year than the sum total of all human-generated imagery throughout history. If you feel awash in AI images now, wait a few years until all games, shows, and movies are generated with AI images.

We can choose one of the Midjourney images to “upscale” or we can ask for more variations. Here, I’ve selected the bottom-right image from generation 1 for more variations. The images are very similar to the previous image, but there are subtle variations. Each of the four images started from slightly different noise fields, so they evolved similarly, but not exactly the same. Computationally generated randomness often uses a base number, called a seed, that should allow the user to recreate that exact randomness (a list of numbers or, in this case, a noise field) everytime it is used.

However, it’s still a bit of a mystery what’s going on in Midjourney at this point in relation to seeds: using the same seed will produce a similar starting field of noise, but it will not be identical and thus the denoising process does not produce an identical result.

Seeds are important in Stable Diffusion,but unlike in Midjourney, they do recreate the exact image each time. Here, I have asked Stable Diffusion to make four variations of “a street” and used the original image’s seed (2240456558), so I have not only the original image, but three new variations as well.

/imagine a street

!dream a street -W 1024 -H 1024 -S 4294061595

Now we’re getting somewhere. The images contain the details and textures that evoke the messy urban condition that we often leave out of our design visions. The puddles, dirt, signs, and random infrastructure are still fictions, but somehow they are more real than most architectural renderings.

Within Midjourney, I'll choose the top-right image to upscale to 1024 × 1024 pixels. As it upscales, new details are added by the AI, but the same basic composition remains. By changing the “-W” and “-H” parameters in Stable Diffusion, it also produces a larger image at 1024 × 1024 pixels. However, it doesn’t keep the same visual identity, despite using the same seed as the previous generation's smaller images (gen 2 bottom-left). Instead, it creates an entirely new composition at a larger resolution.

/imagine a street in Osaka --seed 15965

!dream a street in Osaka -W 1024 -H 1024 -S 4294061595

In 1998, I was very lucky to receive a fellowship that allowed me to live and study in Kyoto, Japan. I was burnt out after five years of my first architecture degree and was considering changing careers. For the first few months in Japan, I wandered the streets of the three primary cities of the Kansai region (Osaka, Kyoto, and Kobe), looking for something to inspire me about architecture again.

Even small changes in a prompt can cause large changes in the generated image. Midjourney is more sensitive to this as their algorithm is nondeterministic. Using the same prompt and seed will always result in a slightly different image. Stable Diffusion’s algorithm is deterministic in the sense that if you use the same prompt, seed, and all other parameters, you will always get the same result. Both deterministic and nondeterministic approaches have their advantages. With Stable Diffusion, it is easier to understand the impact of an additional word added to the prompt as we see here between generation 3 and 4. The overall composition is similar, but the addition of “in Osaka” has generated numerous vertically-oriented signs hanging on the buildings. On the other hand, a nondeterministic model feels more liberating, as you know you can’t control everything. You just need to breed better images through your selection process.

/imagine a street in Osaka in sunlight --seed 15965

!dream a street in Osaka in sunlight -W 1024 -H 1024 -S 4294061595

I had the ultimate privilege of not having anything to do. The fellowship paid for my food and housing, and all they expected was a report on my activities a year later. It was the opposite of architecture school as I had no deadlines or pressure. I began taking long walks without a map (pre-smartphone!). I would get lost and then spend the day finding my way home.

AI models start from a set of pre-existing image and text pairs. These training sets can consist of millions or even billions of image-text pairs. Stable Diffusion was trained on the LAION-2B dataset[4] which contains 2.32 billion pairs of images, with English captions, scraped from the internet. This immediately presents the issue of access and bias in the image generation, as it will perform better with English prompts. Midjourney hasn’t released data about their training sets, but it is likely similar, since most AIs are using the same base training sets that have been made publicly available.

/imagine a photo of a street in Osaka in sunlight --seed 9173

!dream a photo of a street in Osaka in sunlight -W 1024 -H 1024 -S 4294061595 -n 4

I was tired of making architecture. I didn’t want to design anything. I just wanted to walk around looking at buildings that were somehow both completely banal and exquisite. There was nothing particularly special about the architecture. Of course, there were gems by Tadao Ando, Shin Takamatsu, and Hiroshi Hara, that I would visit along with the phenomenal traditional temples and shrines, but like any city, the vast majority were anonymous and generic buildings that showed the marks of weather and time.

Stable Diffusion is much more consistent between generations when using the same prompt and seed than Midjourney. The last few generations of results from Stable Diffusion were almost the same, so by adding the “-n 4” parameter, three new variations appear in addition to the original version in the top-left. With Midjourney, changing the prompt while keeping the same seed still results in images that are quite different from each other. Each variation of an initial prompt automatically uses a new seed.

/imagine a photo of a in Osaka in sunlight --seed 14796

!dream a photo of a street in Osaka in sunlight -W 1024 -H 1024 -S 1230306086

Each city had its own unique character. I bought my first digital camera, and a bike so I could cover more ground. My new Fujifilm FinePix 700 had a whopping 1.5 megapixel resolution. That's similar to the resolution that both Midjourney and Stable Diffusion produce now, but these AIs will evolve quickly just like our digital cameras' resolution has skyrocketed.

You might be wondering at this point why Midjourney’s images look so much more colorful and dynamic than Stable Diffusion. There are at least three contributing factors. First, we don’t really know the content of Midjouney’s training data. It’s possible that it contains more images from art history, so it is more “experienced” in creating more “artistic” and less "photorealistic" images. Second, the programmers could have used the exact same training data, but weighted the artistic images higher so they have more influence. For example, one of the subsets of the LAION-5B is LAION-Aesthetics, a 120 million set of images that have been algorithmically determined to have higher “aesthetic” values. The definition of what a machine believes is beautiful remains unclear, but that subset of images could have been weighted higher in the training.

However, the third reason is perhaps the most interesting, and is also the reason for recent improvements in quality given by Midjourney's CEO. In an interview, he stated “Our most recent update made everything look much, much better, and you might think we did that by throwing in a lot of paintings [into the training data]. But we didn’t; we just used the user data based off of what people liked making [with the model]. There was no human art put into it[5]”. In other words, Midjourney users’ data—their selections—are being fed back into the AI model, so the AI model is learning to cater to and from our desires.

/imagine a photo of a construction site in Osaka in sunlight --seed 14796

!dream a photo of a construction site in Osaka in sunlight -W 1024 -H 1024 -S 1230306086

In my wanderings, I started to notice the amount of construction taking place. Coming from New Orleans, construction was an uncommon sight. Although Japan was in a recession at the time, it was building more than the booming United States economy. But New Orleans is a special case: even in the best of times, it seems to be losing ground and sinking slowly into the Mississippi. I don't remember any buildings being built in my five years there, while in Kyoto, a city that had survived 1000 years, there were five buildings under construction just on my block.

Midjourney’s artistic bent can most clearly be seen in its color palette. Although it can produce more muted images, it defaults to a dynamic range of colors with even the simplest of prompts. This isn’t just any “sunlight” in the left image, it is the most glorious sunlight ever witnessed. This presents a problem for the user, as the AI is always trying to create the heroic image of the prompt—and the user must actively tamp down Midjourney's dramatic tendencies.

/imagine a photo of a building wrapped in tarps in Osaka in sunlight --seed 14796

!dream a photo of a building wrapped in tarps in Osaka in sunlight -W 1024 -H 1024 -S 1123249968

Unlike the construction sites that I had worked on back in the United States, these sites were immaculate. The fabric draped over the sites served two purposes: it prevented dust and debris from falling onto the streets, but it also prevented onlookers from seeing the demolition and rebuilding of their city. Each city had its own complex reasons for the rate of construction: population growth in Osaka, earthquake rebuilding in Kobe, and the replacement of traditional—but outdated—houses in Kyoto.

Stable Diffusion’s approach to color is more neutral or literal. Unless explicitly prompted, it will return images that are simply less stylized than Midjourney. That doesn’t mean that it isn’t possible to produce highly colorful or stylized images with it, but it tends towards more basic and ordinary imagery. You get what you ask for in Stable Diffusion, while Midjourney is your imagination with an added techi-color filter in high saturation, going beyond the literal interpretation of the prompt.

/imagine a photo of a building wrapped in blue tarps in Osaka in sunlight --seed 14796

!dream a photo of a building wrapped in blue tarps in Osaka in sunlight -W 1024 -H 1024 -S 1123249968

The most common color tarps I saw were blue and green with a few white or gray. Most were connected to metal scaffolding, but a few construction companies still used bamboo scaffolding for smaller renovations.

Both models are also highly sensitive to color words in the prompt. The introduction of the word “blue” radically changed both images. It is often difficult to contain the color, especially in Midjourney. When you prompt with a color, it takes you seriously, and the color will often bleed across the image into other objects.

/imagine a photo of a building loosely covered with white translucent tarps. Osaka street with overhead wires, uhd, 8k --seed 17125

!dream a photo of a building loosely covered with white translucent tarps. Osaka street with overhead wires, uhd, 8k -W 1024 -H 1024 -S 3299302058

I began taking photos of these fabric structures to document their diversity. Everything—from small repairs on a two-story house to skyscrapers—would be draped in billowing white, green, or blue fabrics. I took thousands of photos and distilled them into three hundred divided between the three cities.[6]

The models are trained on image-text pairs so certain words hold significant meaning, as they may have appeared frequently or are highly specific to a certain type of image or style. Most prompts use these keywords as a way to evoke a style, medium, or property without actually using these tools or mediums. Here, the two keywords “UHD” and “8K” are added, to have the AI attempt to make an image with higher levels of detail seen in ultra high definition and 8k images.

/imagine a photo of a Kyoto street with a building loosely covered with green, white, and blue translucent tarps. Overhead wires, uhd, 8k

!dream a photo of a Kyoto street with a building loosely covered with green, white, and blue translucent tarps. Overhead wires, uhd, 8k -W 1024 -H 1024 -S 3299302058

Once I began looking, I saw them everywhere. Like the change of seasons, the color of a neighborhood changed with the state of construction projects. One day an old Kyoto Machiya would be draped with green tarps, and six months later a three-story concrete apartment building would be unveiled. The workers would fold away the fabric tarps and truck them off to some other neighborhood's transformation.

The evocation of keywords is magical. The AI really doesn’t know what “8k” means on a technical level. It is limited to making 1024 × 1024 images, and doesn't have the ability to literally make 8192 × 8192 images. Instead, some of the images it was trained on included images that were probably 8k at one point, and then downsampled, but still retained a caption that included “8k”.

/imagine a photo from an Osaka street of a tall building draped in white translucent fabric. The fabric tarp blows freely in the strong wind. Photorealistic, architectural photography, uhd, 8k

!dream a photo from an Osaka street of a tall building draped in white translucent fabric. The fabric tarp blows freely in the strong wind. Photorealistic, architectural photography, uhd, 8k -W 1024 -H 1024 -S 3934460255

Osaka's veils were my favorite. While I was walking in Osaka one day, there was a strong gust of wind and the bottom of a 20-story white veil came loose from a tower. I sat and watched (with a dead camera battery) as the workers attempted to recapture the fabric as it floated over neighboring buildings.

Similar to the evocation of “8k”, the AI has no idea what “billowing” means—nor can it simulate the physics required to model fabric in the wind. It simply knows what “billowing” looks like and can apply that to the image. This mimicry comes naturally to artists but became, oddly, more difficult with the advent of digital modeling. We felt like we needed to accurately model everything using real (digital) forces. We needed particle systems, spring networks, and collision detection.

Faking it wasn’t enough despite the entire history of art proving that “faking it” was more than enough—or even better.

/imagine a photo flying above osaka city

!dream a photo flying above osaka city -W 1024 -H 1024

Unfortunately, I had to return to the United States when the fellowship was over. I began working at a firm, making models digitally and physically, creating renderings for competitions, and spending far too long in Photoshop making tedious edits to those renderings.

One of the biggest differences between Midjourny and Stable Diffusion (and many other models, like Dall-E), is the literalness of Stable Diffusion and similar models. Sometimes, this is really helpful, as you just want what you asked for. Midjourney always seems to take things a step past what you asked for, or simply doesn’t provide what you would expect. Although data scientists creating these models would see this as a failure of the model, for artists and designers, it presents opportunities to spur the imagination. Here we see two views over a city. The Stable Diffusion version gave us exactly what you would expect, but nothing more. The Midjourney version gave us a city, but not only is it at sunset, there appears to be thousands of other planes making light trails through the sky above.

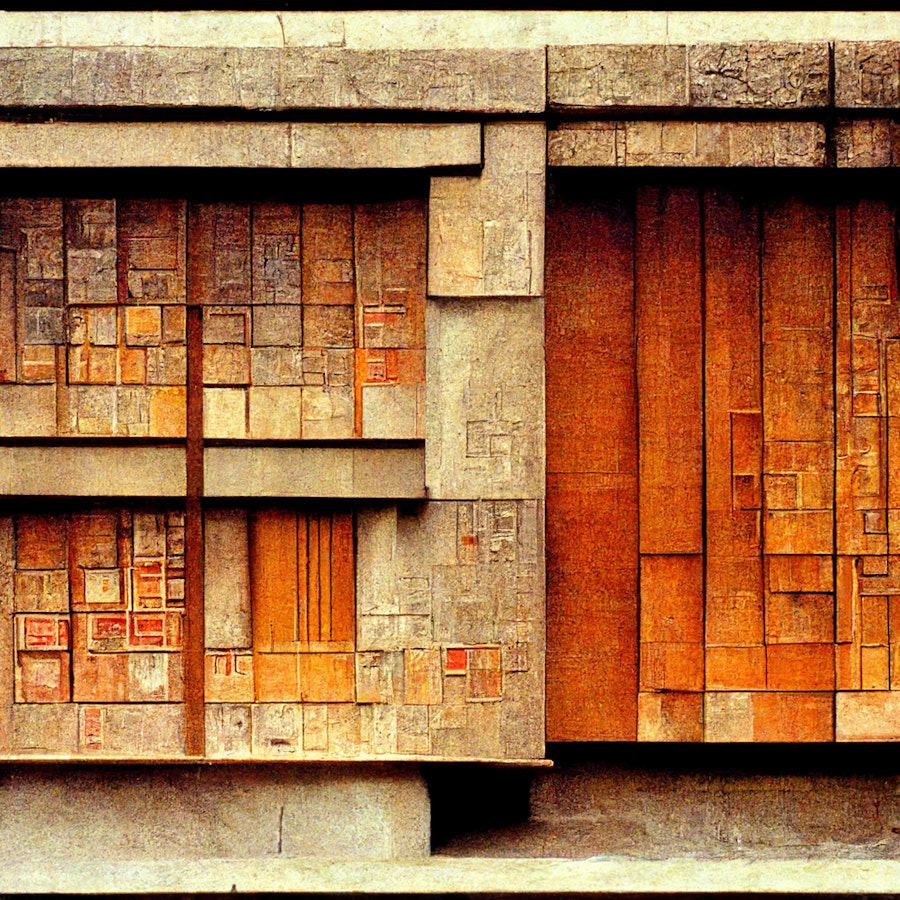

/imagine a photo of a sagging and folding facade of concrete. A facade by Miguel Fisac.

!dream a photo of a sagging and folding facade of concrete. A facade by Miguel Fisac. -W 1024 -H 1024 -S 2433451519

While in graduate school I discovered the work of Miguel Fisac, Heinz Isler, Frei Otto, Eladio Dieste, Felix Candela, Jean Prouvé, and a number of other architects, engineers, and designers who investigated the integral relationships between form, material, fabrication, and performance. While digitally simulating a fabric structure one day, I began to wonder if I could cast plaster into the fabric to produce a precast facade panel.

One might think that these aesthetic differences between the output of platforms are based on the training data. In reality, the original training data contained billions of images, and it's likely that most text-to-image AI platforms are using the same or very similar training data. Instead, the differences are related more to how that data has been encoded and weighted in the model. Two AI models could be trained on the same exact images, but based on how each model is programmed, their “perception” or "attention" could be quite different.

/imagine a photo of a sagging and folding facade of concrete

!dream a photo of a sagging and folding facade of concrete. -H 1024 -W 1024 -S 2433451519

The experiment failed miserably, I thought, because the cast didn’t look like the digital simulation. Instead, the fabric had expanded and wrinkled under the liquid slurry or plaster. Although disappointed, I tried several more times, for a few months, before finally growing to appreciate the sagging forms.

However, that is not to say that the training data is irrelevant. Even if every single image ever created by humans was used to train the model, there are simply more examples of certain images than others, and this natural imbalance creates significant biases in any model’s ability to understand a prompt. In these images, the previous generation’s prompts both invoked the name of Migual Fisac, a pioneer of flexible fabric formwork. Yet the removal of his name had almost no discernible effect on the image. In effect, both Midjourney and Stable Diffusion have no idea who “Miguel Fisac” is or what his name might mean in relation to concrete.

/imagine a photo of a sagging and folding facade of concrete. A facade by Frank Lloyd Wright.

!dream a photo of a sagging and folding facade of concrete. A facade by Frank Lloyd Wright. -W 1024 -H 1024 -S 2433451519

Instead of fighting the system to produce what I thought I wanted, I gave up some control to the bottom-up physical interactions between the elasticity of the fabric and the gravity and pressure loads of the liquid plaster slurry. I began to understand the more subtle and indirect forms of control I had over the parameters of the system, such as the design of the frame, the type of fabric, and the placement of constraints.[7]

If we were to replace “Miguel Fisac” with “Frank Lloyd Wright,” we immediately see a dramatic difference because both models have been trained on countless images of Wright’s work. One might call this an implicit bias, as it is not created by the explicit programming of either platform, but simply through the abundance of images of one architect’s work and the unfortunate lack of images of another. Certain words or phrases are so prevalent (and thus powerful) that they negate almost any other feature of the prompt.

/imagine a photo of a sagging and folding facade of concrete. The curvaceous protuberances and bulging growths of concrete look inflated like balloons. The texture is of woven fabric.

!dream a photo of a sagging and folding facade of concrete. The curvaceous protuberances and bulging growths of concrete look inflated like balloons. The texture is of woven fabric. -H 1024 -W 1024 -S 3606026364

Through many iterations, I began to understand the value of working with a bottom-up system. I could nudge it in one direction or another, and it was able to produce forms that were beyond my own ability to model them. I had originally been drawn to computational design as a way to gain more control over my designs, but slowly realized that what I was most attracted to was not control, but emergence. I wanted to collaborate with other systems that could augment,compliment, and challenge my own abilities. [8]

This presents a clear problem, but one that we already encounter without AI. Popularity bias is common in all aspects of our life. Amazon, Spotify, and Netflix inundate us with recommendations based on what is popular. And we often heed those recommendations because we assume they must be good since they are popular. This feedback loop marginalizes content that is just as worthy of our attention (or more).

These popularity biases (as well as many more insidious biases) are mirrored by AIs. Trained on an approximation of the sum total of human imagery, popular terms are reinforced in the model and less popular terms struggle to be noticed.

/imagine a photo of a sagging and folding facade of concrete. The curvaceous protuberances and bulging growths of concrete look inflated like balloons. The texture is of woven fabric. Trending on ArtStation. Award winning building.

!dream a photo of a sagging and folding facade of concrete. The curvaceous protuberances and bulging growths of concrete look inflated like balloons. The texture is of woven fabric. Trending on ArtStation. Award winning building. -H 1024 -W 1024 -S 3606026364

I began to scale up my experimentations with fabric formwork. They grew from small 6” × 6” blocks, eventually to 24” × 60” facade panels[9]. My work was gaining more exposure and clients were calling. As the size and complexity of the panels and walls grew, I struggled to physically fabricate them in my studio. Popularity created pressures to standardize, professionalize, and productize the bottom-up process.

Ironically, popularity itself is a type of prompt-craft hack. Prompt-crafting as a term recently coined, within AI text-to-image Discord groups, for the act of writing good prompts. There are prompt-crafting Discord channels where one can go to ask for help in the construction of a good prompt. Similar to copy-editing, the order and selection of words can have a meaningful and dramatic effect on the way the text is understood. One of the most popular prompts used is the phrase “trending on ArtStation,” a reference to ArtStation.com, a leading platform for amature and professional visualization artists. Images upvoted by visitors are featured on the site, thereby getting more upvotes through simple positive feedback loops (aka “trending”). AI artists caught on that since the models were trained on image-text pairs, adding the phrase “trending on ArtStation” would essentially pull some mystical essence from the tens of thousands of images that have actually trended on ArtStation and imbue their AI images with a higher quality. Other prompt-hacks include “competition-winning proposal,” award-winning building,” and “trending on ArchDaily.” Popularity by association.

/imagine a photo of a sagging and folding facade of concrete modular panels. The curvaceous protuberances and bulging growths of concrete look inflated like balloons. The texture is of woven fabric.

!dream a photo of a sagging and folding facade of concrete modular panels. The curvaceous protuberances and bulging growths of concrete look inflated like balloons. The texture is of woven fabric. -H 1024 -W 1024 -S 3606026364

After seven years of exploring flexible formwork, I was ready to move on.

Not unexpectedly, dozens of prompt generators[10] have sprung up to help budding artists write their prompts based on analyses of images produced by each AI model. You enter what you want, and these services help you standardize your prompt to add key phrases that are known to work. Looking to produce an architectural image? Have you tried adding “Zaha Hadid” to your prompt? How about “Frank Gehry”? How about “competition-winning proposal by Zaha Hadid”? That’s sure to produce a fantastic image. Ask for something that is popular and expect to receive a popular (but predictable) response. But what’s the point?

/imagine a photo of a long series of stacked plaster animal balloons. The plaster balloons are all white and form a wall from floor to ceiling. Ultra wide angle, 8k texture

!dream a photo of a long series of stacked plaster animal balloons. The plaster balloons are all white and form a wall from floor to ceiling. Ultra wide angle, 8k texture -H 1024 -W 1024 -S 3862497800

Five years passed before I attempted any flexible formwork projects again, while I focused on other ideas and techniques that had captured my attention. What brought me back was a series of workshops I did with students. After years of developing my own method for flexible formwork, these students questioned everything, and in doing so reawakened my imagination.

It’s healthier for your own creative exploration as well as the collective evolution of the model to avoid popular references. If you are having trouble with a specific prompt, make a radical change. Describe the idea in a completely different way. Try different seeds in Stable Diffusion. Add more Chaos in Midjourney using the “--c” parameter to force the model to show more diverse results that have drifted from your original prompt.

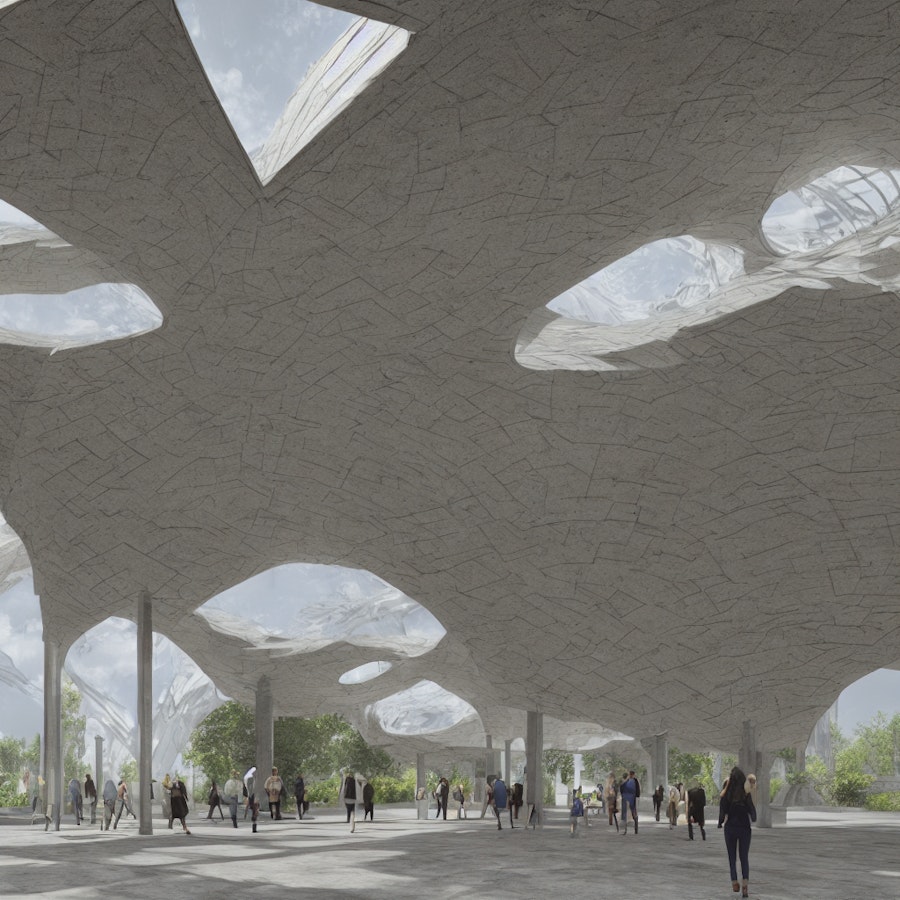

CLIP interpretation of a rendering by Andrew Kudless of Confluence Park: “a group of people standing under a white structure, a digital rendering by Keos Masons, featured on behance, environmental art, vray, made of insects, biomorphic”

CLIP interpretation of a photo by Casey Dunn of Confluence Park: “a group of people walking around a cement structure, a digital rendering by Tadao Ando, featured on cg society, concrete art, vray, biomorphic, rendered in cinema4d”

During this time, I also had the opportunity to explore a totally different approach to fabric and concrete. In 2014, I joined Lake|Flato on an RFP for Confluence Park in San Antonio, Texas[11]. The project’s objective was to create an iconic pavilion for an underdeveloped neighborhood that celebrated the role of water in the local ecosystem.

Let’s take a step back and discuss the underlying model and what’s happening under the hood. So far we have been showing text prompts generating images. We have found that some words or phrases like “frank lloyd wright” have more influence on an image’s generation than “miguel fisac.” There is an important distinction between image searching on Google and generating an image with one of these AI models. While the models have been trained on billions of image-caption pairs scraped from the internet, the models do not access any of these images after the initial training. The training teaches the generative model how to make images out of noise, but it still needs a way to guide that denoising process, based on the user’s prompt.

This is where CLIP[12] (Contrastive Language–Image Pre-training) comes in. One of the problems earlier AI researchers had with image generation with machine learning was that training sets were manually created within highly specific domains (e.g. only images of cats, faces, or the works of Zaha Hadid Architects).

This made the generative models very good at constructing realistic representations of each specific area it was trained on, but unable to combine concepts in novel ways. That is, unless the training set contained images of Zaha Hadid buildings that looked like cats, it would struggle to generate these.

CLIP, developed by openAI, is a natural language processor that is better able to learn the relationships between images and text captions. The first part of the model learns how to denoise an image while the second part, CLIP, guides the denoising towards the user’s prompt.

Since CLIP is a translator between text and images, it can be used in both directions. Midjourney, Stable Diffusion, and all of the other text-to-image generators have been using CLIP to produce images. But it can also be reversed to create prompts for pre-existing images. Using CLIP[13], prompts can be created for the two images shown above, of Confluence Park. Or maybe “prompt” isn’t the right word—as these are not generating the images. Instead, we are looking at what CLIP “thinks” these images contain, or the phrases that best describe the images based on its training. Instead of AI-images from human-generated texts, CLIP gives us AI-generated captions from human (or AI) generated images.

/imagine a group of people standing under a white structure, a digital rendering by Keos Masons, featured on behance, environmental art, vray, made of insects, biomorphic

!dream a group of people walking around a cement structure, a digital rendering by Tadao Ando, featured on cg society, concrete art, vray, biomorphic, rendered in cinema4d -H 1024 -W 1024 -S 4053388286

After months of concept development, we arrived at a biomimetic solution inspired by the double curvature of regional plant species, a form which collects dew and rainwater and redirects it to their rootstem. The pavilion roof would become a giant water collection system while also creating the necessary shade for the thousands of visitors it receives every year.

Personally, I think this is one of the most interesting and least discussed aspects of the recent explosion in AI-generated images. By using CLIP, we are able to deconstruct the mind’s eye of the generative model. In other words, it is more clear now why certain prompts seem to have no effect on the image generation, while other words have large impacts. In a way, we are reading the mind of the AI model. If I wanted an image that looks like Confluence Park, I could spend hours crafting the perfect text description of what I think Confluence Park is, or I could simply ask the AI model what it sees when it looks at an image of Confluence Park.

To bring this full circle, these AI-generated descriptions of images can then be used to regenerate the images. I have no idea who Keos Masons is[14], but they certainly had nothing to do with Confluence Park.

Likewise, I love the idea of Tadao Ando toiling away in Cinema 4D creating renderings for me, but that unfortunately didn’t happen either.

CLIP doesn’t know anything about Confluence Park, but according to these generated descriptions, it is a biomorphic concrete structure that facilitates the gathering of people. Not too far off! Although it got the literal references to people and tools incorrect (no Cinema 4D or VRay were used in the project), the general gist of the description is correct (although I might quibble on the distinction between biomimicry and biomorphic).

/imagine a group of people standing under a white concrete structure, featured on behance, environmental art, vray, made of insects, biomorphic –seed 15226

!dream a group of people walking under a cement structure, featured on cg society, concrete art, vray, biomorphic, rendered in cinema 4d -H 1024 -W 1024 -S 2102355665

To keep costs low, we knew that the structure needed to be modular. I began parametrically exploring various plan grid tessellations that would minimize the number of units and standardize the construction process. Although forming the concrete similar to Felix Candela’s projects would have been ideal, the cost of labor was too high for a board-formed hyperbolic paraboloid structure. We needed a simpler and more efficient approach.

The CLIP descriptions give us a foundation to build a new prompt that Midjourney or Stable Diffusion might understand. If I begin to remove some of the obvious mistakes (sorry Tadao Ando, your time moonlighting as an archviz guru is over), I may get closer to how a description that I would understand, but it may also remove important keywords that the AI understands with a level of meaning that I don’t. Here, small corrections are made to help guide the images towards what I hope will be better representations.

/imagine a concrete pavilion with a group of people walking under the roof, featured on behance, environmental art, vray, made of insects, biomorphic –seed 15226

!dream a concrete pavilion with a group of people walking under the roof, featured on cg society, concrete art, vray, biomorphic, rendered in cinema 4d -H 1024 -W 1024 -S 4136722620

We began to explore alternative fabrication strategies that were modular, accurate, and innovative. I had worked with Kreysler and Associates in a few academic studios and was impressed with their ability to translate digital geometry into highly refined forms using robotically milled molds to produce fiberglass forms.

Stable Diffusion seemed to be stuck producing aerial views of the prompt. After dozens of failed attempts adding phrases like “eye-level photo” or “seen from below” to the prompt, a simple switching of the first phrase’s word order accomplished the desired effect of bringing the camera to the ground level. Although to a human reader, the differences between “a group of people walking under a cement structure” and “a concrete pavilion with a group of people walking under the roof” is negligible, these represented a significant semantic difference to the AI model.

Interestingly, Midjourney didn’t have any of the same struggles with the camera location or direction. But the reordering of the first phrase also helped refocus the attention on the structure rather than the people.

/imagine a concrete pavilion with a group of people under the vaulting roof, featured on behance, environmental art, vray, biomorphic –seed 15226

!dream a concrete pavilion with a group of people walking under the vaulting roof, columns becoming roof, featured on cg society, concrete art, vray, biomorphic, rendered in cinema 4d -H 1024 -W 1024 -S 644791012

Kreysler fabricated three modular molds out of a composite of fiberglass sheets, resin, and a 2” thick balsa wood core. These incredible molds were positioned on site at an angle that allowed the concrete to not require a top-mold: essentially curved tilt-up concrete panels. This further reduced the cost, as only one side of the formwork was required. This also meant that the texture of the concrete was smooth on the interior, as it was cast against the fiberglass molds, while the exterior of the concrete vaults were deeply textured with a broom finish.

Removing the “made by insects” for Midjourney helped to diminish the nest-like qualities of the previous generation. However, the concrete still appears highly textured. With Stable Diffusion, I am attempting to add a structural fluidity between the (nearly absent) columns from the previous generation and the roof. By adding “columns becoming roof” we get more columns, but they still don’t feel like an integral part of the vaulting structure.

/imagine A photo inside a biomorphic concrete pavilion with a group of people under a smooth vaulting roof, featured on behance, environmental art, vray, volumetric lighting, bright sun light, wide-angle lens, warm tones, tall dry grasses and live oak trees behind the pavilion --seed 15226

!dream A photo inside a biomorphic concrete pavilion with a group of people walking under the vaulting roof, columns becoming roof, featured on cg society, concrete art, vray, organic architecture, volumetric lighting, bright sun light, 8k, hq, tall dry grasses and live oak trees behind the pavilion, rendered in cinema 4d -H 1024 -W 1024 -S 644791012

The project opened to the public in 2018 and won the AIA National Honor Award in 2019. As my first built project of this scale, I am looking forward to exploring the integration of form, fabrication, and performance at even larger projects. What began as a small experimental pavilion will hopefully inspire new projects in my practice and teaching, investigating innovative ways of designing and building with nature and technology.

Further refinement to the order of words, synonyms, and additional phrases that evoke the desired lighting, coloring, and textures further help push the images towards the desired direction. Unfortunately, I am still struggling with Stable Diffusion to produce images that are as close to my vision as Midjourney. As an open-source project that can run on local machines for free, I think Stable Diffusion has an excellent foundation. The development in just two months has been phenomenal, but I’m finding it harder to push the images where I want them to go. There is a stubbornness to the model, and also the interface. While Midjourney almost begs the user to iterate on each generation, Stable Diffusion presents the generated image as a finished product.. At the pace of development, this functionality will probably arrive before this article is published.

Things are moving so fast that I’ve stopped being surprised by the new improvements and features that are added daily across the image generation space.

/imagine rolling hills, tall grasses, mesquite trees, cedar elm trees, live oaks, texas hill country, landscape photography

!dream rolling hills, tall grasses, mesquite trees, cedar elm trees, live oaks, texas hill country, landscape photography -H 1024 -W 1024 -S 145626783

After moving to Texas from California in 2020, I began spending more time outside but also seeking shelter from the harsh sun. A visit to Dripping Springs, Texas renewed my 20-year old fascination with using fabric as an exterior layer of structure. The landscape of rolling hills, tall grasses, and endless fields of mesquite and cedar elms inspired me to design a home that created micro-climates of shade and shelter between the landscape and home.

Both Midjourney and Stable Diffusion excel at landscape photography. The simulation of natural forms such as plants and trees, an ever-present struggle with digital rendering, is easy for AI image generation platforms. I can only imagine that this is due to the abundance of photos of landscapes in the original training data compared to photos of structures like Confluence Park or Miguel Fisac’s oeuvre.

See detailed photo above of the faux signature.

Although the geometric complexity of a tree far exceeds that of a concrete facade or shell structure, these AI models don’t really care about geometric complexity. The raw material of the images are pixels, not vertices, and it is far easier to reconstruct a tree from noise than it is to 3D model one.

But look at the lower left of the Stable Diffusion image closely. There is a subtle signature or watermark on the generated image. Incredibly, the AI models are so attentive to the details of images, that they try to reconstruct common features of landscape photos: the photographer’s signature. Here, we get into interesting and controversial legal territory on the nature of authorship and ownership.

/imagine a minimalist glass house, rolling hills, tall grasses, mesquite trees, cedar elm trees, live oaks, texas hill country, landscape photography

!dream a minimalist glass house, rolling hills, tall grasses, mesquite trees, cedar elm trees, live oaks, texas hill country, landscape photography -H 1024 -W 1024 -S 3811618402

Let’s first situate a simple, contemporary house in the landscape. The glass house is striking in contrast to the landscape, but it acts as the landscape’s foil. You are not meant to experience the landscape from the modernist house, you are meant to look at it, as you would art on your wall. You are removed from the temperature, wind, sounds, and smells. Instead you are sealed within an air-conditioned box that could have been dropped anywhere and would aesthetically succeed, as its success rests on separation and contrast.

Doubtlessly, thousands of artists and photographers did not willingly provide their images to be used in the LAION-5B training data. The models were trained on images scraped (some might say stolen) from the internet.[15] The models learned the features of these images and how to convert pure noise to something that passes as an artist’s signature. But, to be clear, this isn’t a specific signature of any artist, just like these trees are not representations of any specific tree that has ever been photographed. Similarly to how my brain has been trained on a lifetime of seeing trees and signatures, I could attempt to draw them from memory. However, they would never pass as the original because that's not how memories work—for the average person or AI. After the actual or virtual neural nets are trained, the original data is thrown away. There is no “photographic memory.” We— and the AIs— literally just have low-resolution mental models of “tree” and “signature” that are amalgams of every tree or signature we have ever seen. These models are constantly being refined and reinforced by every new tree or signature we see.[16]

/imagine a minimalist glass house, metal construction scaffolding surrounds the house, rolling hills, tall grasses, mesquite trees, cedar elm trees, live oaks, texas hill country, landscape photography

!dream a minimalist glass house, metal construction scaffolding surrounds the house, rolling hills, tall grasses, mesquite trees, cedar elm trees, live oaks, texas hill country, landscape photography -H 1024 -W 1024 -S 3811618402

A goal of the design of the Dripping Springs House is to diffuse the barrier between inside and outside through a lightweight lattice structure and a dematerialization of the facade. Instead of large plates of glass, the facade is composed of layers of thin columns and translucent panels. The dividing line between inside and outside is ambiguous and blurry. Trees and plants cross the vague border; columns clump and spread like trunks in a forest. But beyond a description of the desired architectural properties, the design process itself also exists in an indistinct latent space of infinite possibilities.

A signature feature of AI images has been an expected level of aesthetic ambiguity. In 2018, the artist collective Obvious created a series of generative images using generative adversarial networks (or GANs— a precursor to today’s Latent Diffusion Models), called Edmond de Belamy.[17] The blurry portraits of a vaguely-male figure were generated from a model trained on 15,000 portraits in the WikiArt archive.[18] The same year, the artist Mario Klingemann (aka Quasimondo) created Memories of Passersby with similarly blurred and slightly grotesque portraits.[19] Noses, eyes, and mouths are misshapen and slightly out of place. Often there are three eyes or multiple mouths. These early AI models struggled to compose realistic faces.

With the introduction of Latent Diffusion Models, AIs are able to generate much more realistic representations of faces— or almost anything. AI models' FID (Fréchet Inception Distance) scores are rapidly dropping. FID is the statistical “distance” between actual images and generated images. If a computer can be tricked into thinking that a generated image is actually a real image, the FID score for that model decreases. As FID scores have decreased, so has the ambiguity of the synthetic images. Although I see the drive towards realism as natural, I wonder if it misses the value of images to offer ambiguous interpretations. If a computer can generate an image that is indiscernible from the real, the model is seen as the most advanced. Yet in the creative process, we often avoid realistic renderings, as they are far too resolved and don’t offer the creative interpretations of the simple sketch.

The “mistakes” of AI models are the biggest value to us as designers, as they present us with something unexpected.

/imagine a minimalist glass house, metal construction scaffolding surrounds the house, rolling hills, tall grasses, mesquite trees, cedar elm trees, live oaks, texas hill country, landscape photography (--stylize values from 625 - 60,000)

!dream a minimalist glass house, metal construction scaffolding surrounds the house, rolling hills, tall grasses, mesquite trees, cedar elm trees, live oaks, texas hill country, landscape photography -H 1024 -W 1024 -S 3811618402 (-C values from 0 - 20)

For several generations of architects, we have become accustomed to the idea of designing with infinity. Mario Carpo describes Deleuze and Cache’s concept, in the 1990’s, of the “objectile” as “not an object but an algorithm – a parametric function which may determine an infinite variety of objects, all different (one for each set of parameters) yet all similar (as the underlying function is the same for all).[20] Even before the wave of parametric design, architects were often designing an algorithm, manually enacting it, and tweaking it with various site, programmatic, or material parameters to produce new projects. Through either analog or digital methods, the job of the designer has been to both construct a process (or machine) for exploring design options, and direct it towards the desired outcomes. What has changed in the last thirty years, first through parametric modeling and now AI-generated images (and soon 3D models), is both the speed of exploration and the intentionality of the designer.

The programmers of both Midjourney and Stable Diffusion haved exposed certain parameters that control some of this ambiguity. Although these parameters operate differently, they have been described with similar terms. The “Cfg Scale” in Stable Diffusion “adjusts how much the image will be like your prompt. Higher values keep your image closer to your prompt.”[21] The “Stylize” parameter in Midjourney “sets how strong of a 'stylization' your images have, the higher you set it, the more opinionated it will be.”[22] Although different, both have a similar effect in telling the AI to ignore your prompt to some degree. With both settings, there is a default middle ground that allows the model to drift enough from the prompt to still present novel results. By setting the CFG Scale in Stable Diffusion to something very low (1-5) it produces less coherent images that have ignored much of the prompt. In Midjourney, a Stylize setting of 10,000 and above produces images that have vague connections to the prompt, but have either drifted far or concentrated on only one part of it. In addition, the images become less realistic and more painterly. The progression from ambiguity to literalness is clearer in Stable Diffusion, as the constant seed allows us to isolate the effects of parameter changes.

/imagine a minimalist glass house, metal construction scaffolding surrounds the house, rolling hills, tall grasses, mesquite trees, cedar elm trees, live oaks, texas hill country, landscape photography (Midjourney v3 model)

/imagine a minimalist glass house, metal construction scaffolding surrounds the house, rolling hills, tall grasses, mesquite trees, cedar elm trees, live oaks, texas hill country, landscape photography (Midjourney v3 model images remastered with v4 model)

When designs needed to be manually drawn, the designer could only produce a limited number of options. The designer needed to be very intentional with the selection of these limited options, as there wasn’t time to explore a broader range of options. We have developed the opposite problem with computational design: with an infinite range of options available and the tools to nearly instantly model, draw, and visualize them, we drown in the flood of information. Mark Burry has described this as the “stopping problem.”[23] How does a designer know when to stop when they have built or simply use a process that is able to produce an infinite number of options? Interestingly, Burry suggests that the answer circles back towards intentionality: with the careful construction of constraints, and the intelligent use of their design judgment (and maybe even some computational optimization), the designer can steer the designs towards their goal. The designer is a co-author in the process through their initial constraints and eventual judgment and selection.

One of the most exciting developments this summer has been the emergence of multiple models. While early on Google and OpenAI’s models received much of the attention, smaller and self-funded companies such as Midjourney and Stability.AI entered the scene and offered alternative workflows and models. In particular, Stability.AI is an open-source company, and provides its code on github for all to download and modify for free. According to Emad Mostaque, “if we make all of that open [the training data, the model, and the model weights] then it is like infrastructure for the mind for everyone going forward. And there are other places we can make money by adding value and everyone else will be forced to go open as well.”[24] Meanwhile, Midjourney’s model is not open-source, however they have begun to experiment with using Stable Diffusion’s model in the beta of their version 4 model. This has created an interesting situation that to the user feels a bit like landing in a country where you don’t speak the local language. Everything that previously worked in Midjourney no longer works when the underlying model changes. Although the interface looks the same, the “mind” has radically changed and no longer speaks the same language. I fully support the movement towards open-source models, however, we are entering a realm where we might need to learn many dialects in order to communicate with a variety of machines.

As the number of models explodes and diversifies, we will have an equal explosion in the skills of designer-translators who are able to communicate with these new models in ways that are productive.

/imagine a minimalist glass house with a family, metal construction scaffolding surrounds the house, rolling hills, tall grasses, mesquite trees, cedar elm trees, live oaks, texas hill country, landscape photography

!dream a minimalist glass house with a family, metal construction scaffolding surrounds the house, rolling hills, tall grasses, mesquite trees, cedar elm trees, live oaks, texas hill country, landscape photography -H 1024 -W 1024 -S 3811618402

We need a new understanding of design that acknowledges the value of emergence in a system that is co-authoring with us. If evolution is defined by the process of natural selection, designing in partnership with machines is more similar to the process of unnatural selection: the breeding of crops or animals for certain traits. Only some of the parameters are fully controlled in a top-down manner, and much is left up to the bottom-up interactions of bits and atoms. Our task as designers is to use our experience and knowledge to direct the design towards our goals, while being open and receptive to novel properties that we did not expect. First with parametric design and now with AI-assisted design tools, the designer has gained ways to both explore their imagination more deeply, and maybe more importantly, to find ways to expand beyond it.

It is critical to realize, though, that each of these models will come with their own biases. As already discussed, there is the issue of “popularity bias” learned through the quantity (or lack thereof) of image-text pairs on particular topics in the training datasets. If each model has their own dialect, it may be easier to communicate something in one model than another. And most certainly there will be large gaps of understanding that are shared by all models.

When working with AI-generated images from text prompts, we will inevitably encounter biases. As mentioned earlier, AI models are mirrors of culture and will reflect the biases that pervade it. Some might argue to avoid the use of AI models due to the biases, but to me that is like avoiding a mirror because you don’t want to see your face. Are AI models biased? Absolutely. But this provides us an opportunity to more deeply engage, investigate, and confront the prejudices and predispositions not only in AI but throughout culture.

When discussing biases and AI, it is important to try to understand the locus of bias: is it an artifact of the training data, the programming, or of your own prompt? For example, in the prompts above, I asked for a “house with a family” yet no family materialized in the images. Why has the model ignored the request?

/imagine a minimalist glass house with a family. A crowd of people in the foreground. Metal construction scaffolding surrounds the house, rolling hills, tall grasses, mesquite trees, cedar elm trees, live oaks, texas hill country, landscape photography.

!dream a minimalist glass house with a family. A crowd of people in the foreground. Metal construction scaffolding surrounds the house, rolling hills, tall grasses, mesquite trees, cedar elm trees, live oaks, texas hill country, landscape photography -H 1024 -W 1024 -S 3811618402

In this infinite landscape of choice and co-authored imagination, how do we begin to decide which direction to travel? In other words, if parametric design—and now machine-learning—have expanded the options and the speed at which they can be generated, how do we develop a design intuition that allows us to operate as designers? How do we learn how to set up the proper design constraints and make appropriate judgements? Fortunately, this has always been the purpose of design education: the development of critical thinking that enables a designer to organize a project’s objectives and constraints into a set of instructions that will ideally result in a structure that performs well across a range of technological, programmatic, and cultural criteria. While some have argued since the dawn of computation in architecture that the computer is doing the designing instead of the human, this argument continues to fail with each new technology. These technologies are all tools that should enable the designer to make better decisions as they allow faster iterations.

The first option is that the training data is biased against the simultaneous generation of architecture and people. Although this sounds far-fetched, the history of architectural photography is distinctly biased against the human figure. Originally, the long shutter times and larger apertures needed to capture buildings often negated the human figure as it would be rendered on the film as a moving blur. Over time, an expected aesthetic of architectural photography developed: that it would focus on the built form and not on the life of its inhabitants. Until recently, both architects and photographers avoided photos that contained people. If these AI models were trained on images of buildings, there is a good chance that the models simply don’t understand how to compose images with both buildings and people. The addition of “a crowd of people in the foreground” has done nothing to generate more humans in the images.

/imagine A family standing in front of a minimalist glass house. The house is wrapped in billowing white sheer tarps. Metal construction scaffolding surrounds the house, rolling hills, tall grasses, mesquite trees, cedar elm trees, live oaks, texas hill country, landscape photography

!dream A family standing in front of a minimalist glass house. The house is wrapped in billowing white sheer tarps. Metal construction scaffolding surrounds the house, rolling hills, tall grasses, mesquite trees, cedar elm trees, live oaks, texas hill country, landscape photography -H 1024 -W 1024 -S 3811618402

A major caveat to this argument is that although software should be enabling, the initial learning curve is frustrating and intimidating. It can take weeks or months before a designer reaches a level of facility with an application where they feel it can actually help them explore a design. Over my nearly twenty years as a design instructor, I have witnessed the learning curve get steeper and steeper with a corollary with students’ frustration with technology. Although the objective of design technologies is to make the exploration of design easier and more sophisticated, it can now take many months or years before a young designer feels comfortable enough with the platform to actually design with it. We have inadvertently created a generation of designers that often do not have the analog tools (sketching and physical model-making) to quickly explore a design space while also not yet having the necessary facility in digital tools.

If students don’t have the skills to quickly sketch an idea nor the ability to develop parametric variations of it through digital means, they are often stuck in a valley of frustration populated with error messages, arcane file formats, and abrupt crashes.

The second option is that bias has been introduced into the system through decisions made by programmers as they constructed and refined the model. For example, both Midjourney and Stable Diffusion seem to be programmed to “listen” more to the beginning of a prompt than the end. Although a human reader would understand “a house with a family in front” and “a family in front of a house” as nearly identical in meaning, word order seems to have more significance with AI models. Repetition, direct syntax, and simple grammatical structures are preferable when trying to communicate with the AI models. With time, the programming will advance and allow for more complex and nuanced phrasing, but for now, this presents a bias inherent in the system. When the generated image does not match your expectations, it may be due to the training data, but it could also simply be that the programming of the model simply doesn’t understand the nuances of your prompt. Revise the sentence and word order, swap out synonyms, and break long complex phrases into bite-size chunks. Through this rewriting of the prompt, you not only learn more about the mental models of the AI, but you begin to develop a deeper understanding of your own priorities and objectives.

/imagine A minimalist glass house covered in billowing draped white translucent tarps on scaffolding. Texas Hill country, rolling hills, cedar elm trees, live oak trees, mesquite trees, tall dry grasses. Color Grading, Editorial Photography, DSLR, 16k, Ultra-HD, Super-Resolution, Exterior wide-angle, Natural Lighting, Global Illumination

!dream A minimalist glass house covered in billowing draped white translucent tarps on scaffolding. Texas Hill country, rolling hills, cedar elm trees, live oak trees, mesquite trees, tall dry grasses. Color Grading, Editorial Photography, DSLR, 16k, Ultra-HD, Super-Resolution, Exterior wide-angle, Natural Lighting, Global Illumination -H 1024 -W 1024 -S 3245297886

I see AI text-to-image tools as one of many solutions to this problem. I have likened AI-generated images to sketches for several reasons, but maybe most importantly is their ease. Although I have compared them above to parametric modeling in that they are able to produce endless options, in operation, they function completely differently, offering the designer an intuitive and non-technical path of design exploration that has a very shallow learning curve. Like a sketch, there is an immediacy between idea and visualization. And the fluid co-authoring of the sketch with the AI allows the young designer to rethink assumptions and expand beyond what they originally conceived.

In the 1977 essay “The Necessity for Drawing: Tangible Speculation” by Michael Graves, he describes the act of co-authoring a drawing with a colleague during a boring faculty meeting:

“The game was on: the pad was passed back and forth, and soon the drawing took on a life of its own, each mark setting up implications for the next. The conversation through drawing relied on a set of principles or conventions commonly held but never made explicit: suggestions of order, distinctions between passage and rest, completion and completion. We were careful to make each gesture fragmentary in order to keep the game open to further elaboration. The scale of the drawing was ambiguous, allowing it to read as a room, a building, or a town plan.”[25]

The third type of bias that I’ve encountered is my own and is usually the hardest to perceive as we are our own blind spot. We simply don't know what we don’t know. After decades of experience within art, design, and architecture, I only know a small fraction of the technologies, styles, and individuals that form the collective history of the disciplines as understood by the AI models. When I read the prompts of colleagues, I’m constantly confronted with my own ignorance. From photography techniques to architectural references, I’m amazed at the breadth of what I don’t know.

This presents both a problem and an opportunity for the user. The history of our disciples are embedded in the prompts we write. The invocation of “brutalist”, “DSLR”, or “Paul Rudolph” in a prompt can radically change the content, composition, and aesthetics of the generated image. Words have power in completely new ways. The use or misuse of a word can make an image veer off in a new direction and we must take this as a challenge to better understand our references.

/imagine A minimalist glass house::1 The house is covered in billowing draped white translucent tarps on scaffolding::3 Texas Hill country, rolling hills, cedar elm trees, live oak trees mesquite trees, tall dry grasses::1.5 Color Grading, Editorial Photography, DSLR, 16k, Ultra-HD, Super-Resolution, Exterior wide-angle, Natural Lighting, Global Illumination::1

!dream A minimalist glass house. The house is covered in draped white translucent tarps on scaffolding. Hanging fabric mesh tarps. Texas Hill country, rolling hills, cedar elm trees, live oak trees, mesquite trees, tall dry grasses. Color Grading, Editorial Photography, DSLR, 2.5D, 16k, Ultra-HD, Super-Resolution, Exterior wide-angle, Natural Lighting, Global Illumination -H 1024 -W 1024 -S 25853655

Although Michael Graves would most probably disagree, his description of creating a collaborative drawing with another human mirrors my own experience co-authoring an image with an AI. Unlike learning any of the myriad design softwares that are expected of the beginning designer, using AI tools is accessible and this is a feature, not a bug. I have heard criticism from colleagues that the use of AI in design is both too easy and even harmful as the student will not learn the hard task of actually designing. My view is that we need easier tools for design that enable creative exploration and help to develop a designer’s intuition. This is not to negate the value of a rigorous and technical education in a range of other techniques, but instead a validation of the importance of sketching in all of its playful ambiguity. Beyond the specifics of how AI is used while exploring a specific project, I think its greatest value is in pushing the designer to write about their desires, visually evaluate the results, and iterate. This iterative process between words, images, and thoughts will help the designer build experience and intuition that is valuable on its own.

As prompts get longer, it is more difficult for the AI model to parse the importance of various sections of the prompt. The use of punctuation and repetition can help, although the introduction of text weighting has greatly helped my own process. Within written or verbal communication we use emphasis techniques such as bold fonts or changes in pitch or volume to focus the reader or listener’s attention. Multi-prompts with weighting does the same thing for the AI model in Midjourney. In the Midjourney prompt above, there are four primary sections that are separated by two colons followed by a number. The number is the relative importance of that concept compared to the others:

A minimalist glass house::1 (15%)

The house is covered in billowing draped white translucent tarps on scaffolding:3 (46%)

Texas Hill country, rolling hills, cedar elm trees, live oak trees mesquite trees, tall dry grasses::1.5 (23%)

Color Grading, Editorial Photography, DSLR, 16k, Ultra-HD, Super-Resolution, Exterior wide-angle, Natural Lighting, Global Illumination::1 (15%)

By using these multi-prompts with weighting, I can quickly fine-tune the importance of each section. Without the weighting, the AI doesn’t know that I really want the central concept of the image to focus on the draped fabric over the scaffolding. Unfortunately, Stable Diffusion doesn’t yet have multi-prompts with weighting, so I need to find ways to repeat the concepts that are most important to me in a variety of ways.

/imagine A minimalist glass house::1 The house is covered in billowing draped white translucent tarps on scaffolding::3 Texas Hill country, rolling hills, cedar elm trees, live oak trees mesquite trees, tall dry grasses::1.5 Color Grading, Editorial Photography, DSLR, 16k, Ultra-HD, Super-Resolution, Exterior wide-angle, Natural Lighting, Global Illumination::1 (v3 remastered in v4)

Dall-E edit 1: Generate: Branches of a live oak tree Dall-E edit 2: Generate: A large crowd of people on a grass lawn

Over the last few months, I have produced over 16,000 images just with Midjourney (and probably a few thousand more with Stable Diffusion). With each image generation, I’m making several choices: do I need to edit the prompt? Which of the four variations do I want further variations of? Do I need another roll of the same initial generation? I’m often making these decisions within seconds and I don’t worry too much if my decisions don’t produce the desired results, as I can always go back and produce alternatives. The choices we make further train the AI model, however the reverse is also true: our consumption and analysis of the images changes our own mental models and this affects our own intuition.

Design education is centered on optioneering: the production of alternatives that you can evaluate, select, and refine. The fact that this mirrors the process in Midjourney has probably helped to make it so addictive compared to other platforms. I’m optimistic that the ease of use paired with the similarity of the variation/selection/refinement workflow of AI softwares will help designers (and specifically early design students) become better designers.

The ecology of AI models and platforms is becoming more complex with each week. As was previously mentioned, Midjourney now uses Stable Diffusion’s model for their beta V4 model. So within one platform, we have access to multiple models that interpret the prompts in different ways. Similarly, each platform has programmed specific features that are unique to it. One of the most interesting features to be added recently has been the targeted editing of images in Dall-E, a third AI platform. Although I have not used Dall-E much due to both its high cost and overly-sensitive content restrictions, it does have the ability to erase parts of an imported or generated image and have the prompt focus only on that region. In the example above, the previously generated image from Stable Diffusion was imported into Dall-E and then two edits were made. First, the floating house in the tree was erased and the prompt “branches of a live oak tree” was used to fill in the erased region. Then the grass was erased and the prompt “a large crowd of people on a grass lawn” was used to populate the lawn.

Each model and platform provides a different partner with different capabilities. As always, it is the responsibility of the designer to understand their tools and know when switching between them might be more productive.

/imagine The interior of an inflated room made of translucent fabric. A geodesic spaceframe supports the fabric. A contemporary living room. --ar 1:2 --sameseed 31846 --iw (0.0 to 2.0 in steps of 0.2)

/imagine The interior of an inflated room made of translucent fabric. A geodesic spaceframe supports the fabric. A contemporary living room. --ar 1:2 --sameseed 31846 --iw (0.0 to 10.0 in steps of 1.0)

Dream! The interior of an inflated room made of translucent fabric. A geodesic spaceframe supports the fabric. A contemporary living room. -H 512 -W 1024 -S 1685008208 (Image transparency from 0 to 100 in steps of 10)

Technology, in its most ideal state, is emancipatory. It should lead to greater freedom, expanded capability, and less work. Unfortunately, the history of design technologies has not always resulted in such.

Although the introduction of computer-aided design in the 1980s helped designers produce work faster, it did not reduce the number of hours that architects worked. Over several decades, the architectural discipline has added waves of successive new technologies that supposedly would make architecture easier, yet we have become lost in a complex media landscape of conflicting file formats, steep learning curves, and tedious updates. It has become increasingly difficult for the professional designer (and even more so for the student) to navigate this landscape. Most of these new technologies are focused on the tail-end of the design process: production. Very few new technologies have focused on our ability to explore both our individual and the larger collective imagination. I do not expect AI text-to-image platforms to replace many of the design technologies we currently use because they offer something that is simply absent from our toolbox. They are easy to learn, playful, and visionary: the polar opposite of most of our design technologies.

In addition to text-to-image, many of the platforms have introduced some sort of image-to-image generation. This process uses an image in addition to a text prompt as the input for the AI. In many ways, this is the most useful to designers as it allows greater control over the overall composition, colors, and other properties of the generated image. In the examples above, the same image was used for each iteration but used a range of image weights (in Midjourney) or image transparencies (Stable Diffusion).[26]

However, Midjourney and Stable Diffusion differ in their current implementation of images as prompts. Stable Diffusion is more clear in that the image serves as the basis for the initial noise field. The user controls the transparency of the image prompt from 0% to 100%. A value of 0% essentially ignores the image while a value of 100% adheres so closely to the image prompt that there are barely any differences between it and the generated image.

Midjourney’s implementation is more opaque in that the generated image will never look exactly like the image prompt, although color ranges, composition, and certain geometrical features may be similar. Even at an image weight of 10 (the image prompt is valued 10 times the importance of the text prompt), the generated image only very roughly resembles the image prompt. This can be both frustrating and freeing: since you will never obtain an exact copy of the image prompt: no matter how strong the image weight, its purpose is more as a general vibe influence to the generated image.

/imagine a building facade loosely covered in gossamer translucent white fabric over bamboo scaffolding::2 Interior photograph of a living room and dining room::1 Texas Hill Country, mesquite trees, live oak trees. Tall dry grasses::1 morning light::1 architectural photography, landscape photography, editorial. DSLR, 16k, Ultra-HD, Super-Resolution, Exterior wide-angle, unreal engine 5, megascans, photorealistic::1 —ar 2:1

One of the existing tools in the architect’s tool box that AI image generators are most often compared with is architectural visualization software. There is the perception that, because AI text-to-image generators can make images that visually are similar to renderings, AI tools will somehow supplant both rendering technologies and the visualization artist. I think this is simply mistaking the ends for the means. Just because something appears like something else doesn’t mean they are identical, nor do they occupy the same territory in the designer’s process. In fact, I see digital rendering and AI text-to-image technologies as near opposites.

For everything that rendering excels at, AI is terrible, and vice versa. Despite the visual similarities of the end result, they perform in opposition and offer the designer completely different benefits.

While image-prompts are a way to gain greater control over the generated image, other tools have recently been added to aid in the editing of images (either AI-generated or not). Instead of generating a complete image, the techniques of in-painting and out-painting allow the user to make targeted edits to existing images. The techniques are essentially the same and differ only in the locus of the new image generation: either inside or outside the frame of the original image. With in-painting, the user selectively erases a portion of the image and then the AI fills it back in based on the text prompt and the surrounding image context. With out-painting, the user expands the canvas and targets an area outside of the existing image for the AI to extend based on the text prompt and neighboring context.

The image above was constructed from seven synthetic images made in Midjourney that were stitched together in Dall-E using out-painting. Once the long panoramic image was established, additional in-painting was done to add human figures occupying the spaces. This fictional interior panorama of the Dripping Springs house does not attempt to realistically represent the geometry of the house; after all, there is none at this time. Instead, the panorama constructs a narrative from garden to living room to kitchen to bedroom and back, in a dream-like state where one space bleeds into another.

!dream a building facade loosely covered in gossamer translucent white fabric over bamboo scaffolding. [various descriptions of interior or exterior rooms]. Texas Hill Country, mesquite trees, live oak trees. Tall dry grasses. Morning light. Architectural photography, landscape photography, editorial photography. DSLR, 16k, Ultra-HD, Super-Resolution, Exterior wide-angle, unreal engine 5, megascans, photorealistic.

If we review the advantages and disadvantages of both, this opposition will be clear. Renderings are digital simulations of precise geometric relationships and accurate lighting. They are able to capture the exact geometry of the design at specific times of day and year at the project’s geographic location. Multiple views of the same project or even animations of the project are commonplace. AI images can do none of this. The co-author of an AI image has little to no control over the view, geometry, or the spatiotemporal lighting. On the other hand, AI images appear in seconds; require no modeling, texturing, or lighting expertise; and often capture the atmosphere of a project with little effort. Rendering often occurs at the end of the design process, while I see AI image-generation tools being most used at the beginning.

Instead of attempting to fit AI image generation into the architectural visualization box, we should instead think of it as its own new tool that occupies a new territory.

AI text-to-image (and now image-to-image) tools feel the most like sketching as they combine the informal exploration of sketching with its acceptance of mistakes as creative opportunities. However, unlike sketching, AI images are able to capture the mood of a project much better and with little effort.

This construction of images is often a tedious and time-consuming task of beginning architects. I spent many frustrating and boring days throughout my career rendering projects, and then compositing into the images trees, humans, and every little detail that was too hard to model. Beyond full image generation, AI tools will become the default image editing tool this year. From removing backgrounds to adding in new details to images, AI tools greatly simplify the construction of images. Although some worry that AI tools will replace human visualization artists, I don’t believe this will happen.

Like all new technologies, artists will either find ways to subvert the technology by expressing themselves in ways that are impossible with AI, or will master the technology by constructing images that would be impossible without it.

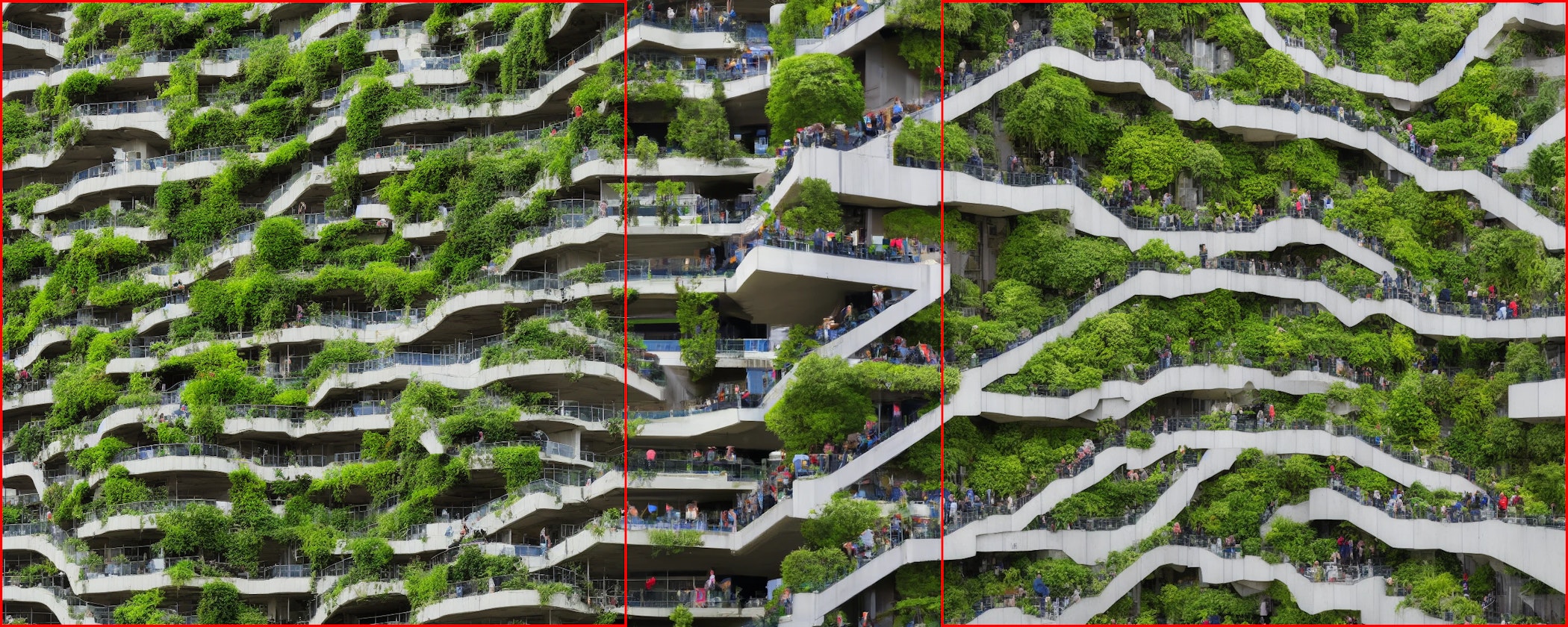

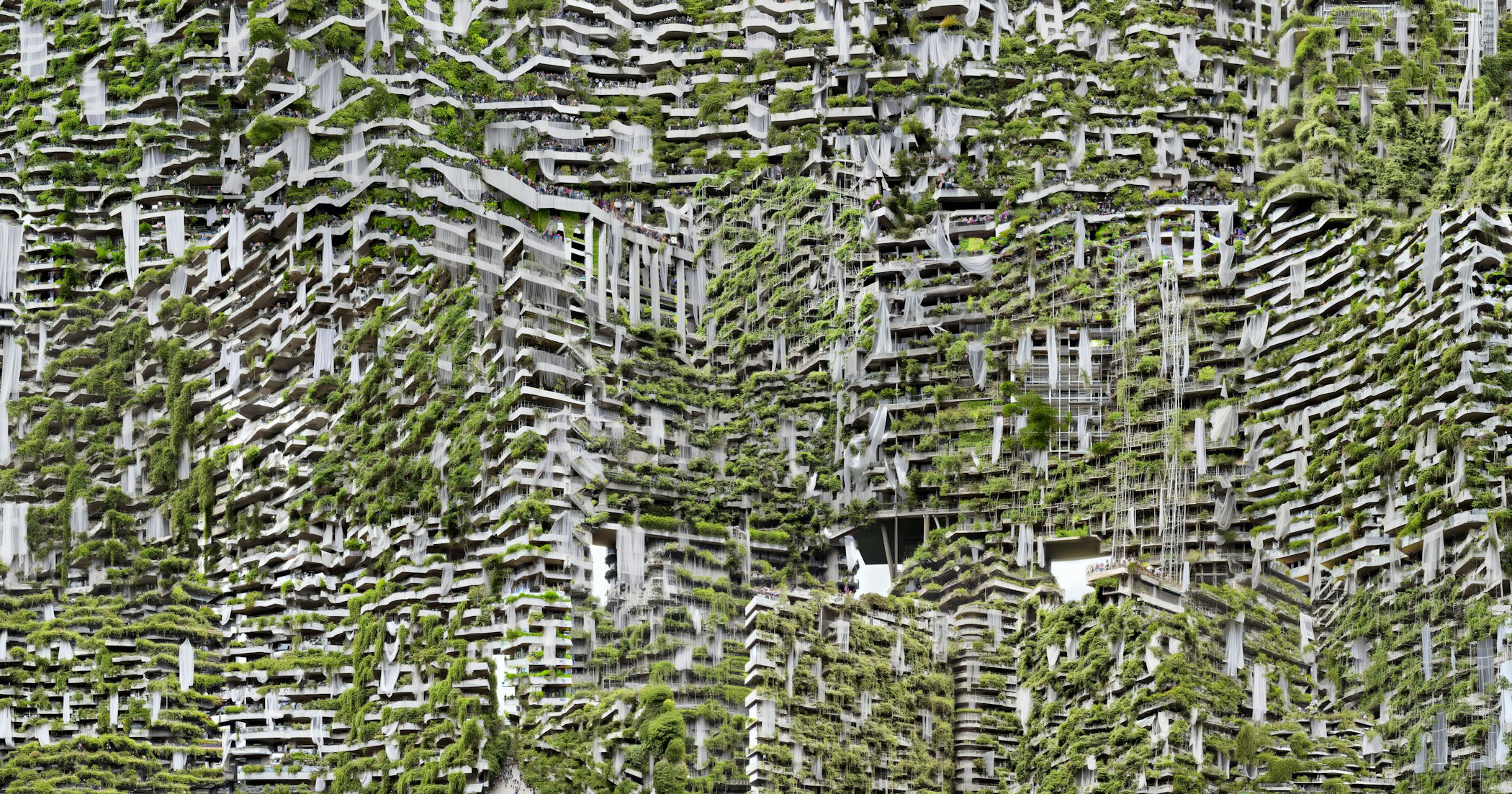

Two images of a total of eighteen images generated with the prompt: !dream A photo of crowds of people. Many people walking or standing on the levels. Bamboo scaffolding latticework. Horizontal courses of concrete. Geologic strata. Striated bands. The facade of a multistory building. Many staircases connect the layers. Lush and verdant hanging plants. Vertical gardens. Editorial Photography, DSLR, 16k, Ultra-HD, Super-Resolution, wide-angle, Natural Lighting, Global Illumination.

We end this narrative with a reflection on the labor of design and representation. Architecture is too difficult and it simply doesn’t need to be this way. The discipline has built up a culture that crushes the mental and physical health of its participants through an inordinate amount of work for little pay.